Designing One Medical’s First AI Assistant

Balancing a rapid launch with the need to build patient trust

I led the design of One Medical’s first AI assistant—adapting a generic Amazon model into a member-focused tool in under four months, without sacrificing patient trust.

“I want AI to guide me, but I need to trust it’s helping, not just giving generic advice.”

Highlights

🚀 4-Month Launch

We delivered the AI assistant in under four months, despite leadership pressure to move even faster. By prioritizing patient trust and empathy, we avoided a rushed, sales-focused approach.

🤖 Pivot from Generic to Member-Centric

Adapting Amazon’s AI for One Medical required a fundamental shift away from upselling. Instead, we integrated a member support knowledge base so the AI could guide users with contextually relevant, empathetic answers.

⏱ Reducing Wait Times & Support Strain

Common inquiries were automated through the AI, freeing member support teams to focus on more complex cases. This change helped cut down multi-day response delays and gave members quicker solutions.

❤️ Validating Empathy and Trust

Diary studies confirmed members appreciated transparent disclaimers and safety checks. Nearly half of participants (49%) said they would reuse the AI, reflecting growing confidence in its reliability.

📖 Refining the Knowledge Base Continuously

We introduced a feedback tracker to improve clarity, cultural competence, and safety. Each new insight informed updates, ensuring the AI remained aligned with real member needs.

✨ Preparing for Personalized, Proactive Care

Post-launch research underscored the need for session memory and proactive health nudges—shifting from simple Q&A toward comprehensive preventive support that feels tailored to each patient.

The Challenge

Speedy Launch vs. Patient Trust

The Urgency

Leadership mandated a quick launch to keep up with competitors. However, the Amazon AI we inherited wasn’t built with empathy or One Medical’s brand values in mind—it pushed upsells rather than addressing members’ real concerns.

My Role

Led design strategy, prototyping, and content and visual systems. This included contributing to user research, developing our new One Medical Member Support Knowledge Base, and co-contributing to our One Medical-specific Feedback Tracker.

Key Tensions

Trust vs. Speed: Could we deliver a robust AI in just four months without compromising user trust?

Amazon’s Generic Model: Upselling health services wasn’t right for One Medical’s approach to care.

Privacy & Safety: Members had legitimate concerns about data usage and accuracy in AI responses.

Trust-buster: Amazon AI focused on upselling products and Amazon Health services.

Building Empathy & Trust

Identifying what our members need

User Research Insights

Patients expected clear, actionable guidance.

They wanted disclaimers about AI’s limitations and how data is handled.

Quick access to care routing was a must to avoid days-long wait times for basic support.

From there, I defined five design tenets:

Earn Trust

Privacy by Design

Guided Choice

Thoughtful Innovation

Continuous Improvement

These tenets helped us transform the AI from a hard-sell tool into a genuine healthcare ally.

The Pivot: Transforming Amazon’s AI

Repurposing a Sales-Driven Model

Amazon’s assistant pushed upsells. Instead, I steered it toward reducing member support wait times and delivering real value, cutting response times for common queries from days to near-instant. I partnered with product, clinical, and support teams to build a One Medical–specific knowledge base.

Before

Amazon AI - Generic, non-member

After

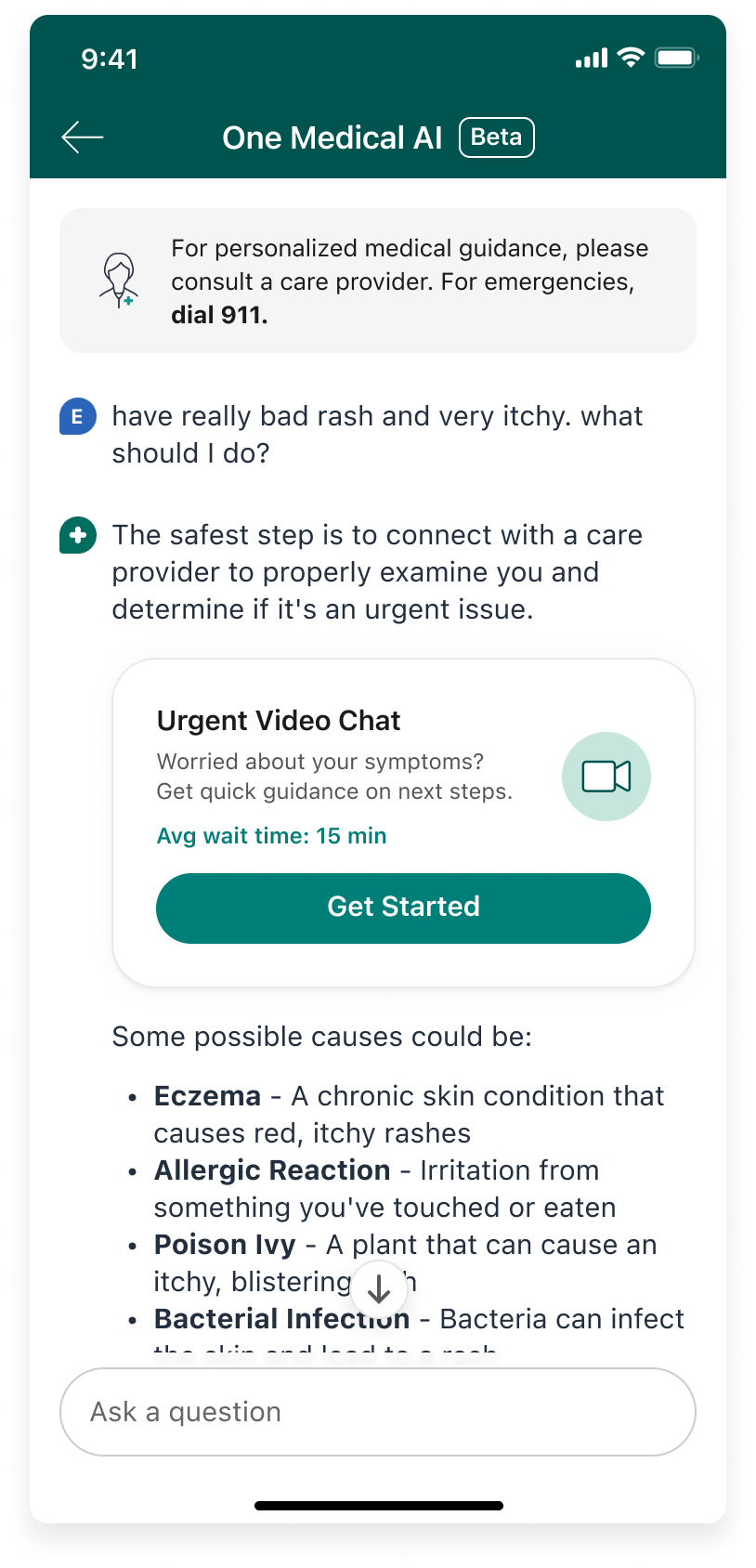

One Medical AI - Personalized, trust-focused

Shaping the Foundation: Knowledge Base & Continuous Feedback

To handle frequent member questions, such as those about membership renewals and lab results, I co-led the creation of a specialized knowledge base. Meanwhile, our feedback tracker captured user issues both before and after launch, allowing us to iterate quickly and keep the AI’s responses relevant and safe.

Crafting a One Medical–Centric Knowledge Base

I drew on my previous experience streamlining member support content:

Replaced generic upsell prompts with answers tailored to top One Medical queries (e.g., membership details, prescription refills, care navigation).

Collaborated closely with PMs, clinicians, and member support to capture the frequent pain points that caused 3-day wait times for basic help.

Structured topics so the AI could swiftly respond, alleviating repetitive questions and freeing support teams to handle complex cases.

By consolidating our existing FAQs and shaping them into a single source of truth, we set the foundation for real, empathetic guidance—fast.

Continuous Improvement via the Feedback Tracker

Even the best-curated knowledge base needs updates once real users start interacting. That’s why we introduced a structured Feedback Tracker from day one. Whenever the AI gave a confusing or inaccurate response, anyone on the team—clinical tech advisors, member support, or product managers—could log it.

We triaged these issues daily, then updated either the knowledge base or the AI’s response patterns. This process caught potential risks early (like missing allergy escalations) and kept our assistant aligned with One Medical’s trust-first approach. Over time, we saw a marked improvement in user satisfaction and far fewer safety flags.
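As a rough illustration of that daily triage loop, here is a minimal Python sketch. It is not the tracker we actually used: the category names, the `content_gap` flag, the `triage` function, and the safety-first ordering are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    # Lower value = reviewed earlier in the daily triage (assumed ordering).
    SAFETY = 0      # e.g. a missed allergy escalation
    ACCURACY = 1    # factually wrong or outdated answer
    CLARITY = 2     # confusing wording or tone


class Fix(Enum):
    KNOWLEDGE_BASE = "update knowledge base"
    RESPONSE_PATTERN = "update response pattern"


@dataclass
class FeedbackItem:
    reporter: str        # hypothetical field: who logged the issue
    summary: str
    category: Category
    content_gap: bool    # True if the knowledge base lacked or misstated the answer


def triage(items):
    """Order the day's items safety-first and suggest where each fix belongs."""
    ordered = sorted(items, key=lambda item: item.category.value)
    return [
        (item, Fix.KNOWLEDGE_BASE if item.content_gap else Fix.RESPONSE_PATTERN)
        for item in ordered
    ]


backlog = [
    FeedbackItem("member support", "Renewal answer was hard to follow", Category.CLARITY, False),
    FeedbackItem("clinical advisor", "Allergy mention was not escalated", Category.SAFETY, False),
    FeedbackItem("PM", "Refill steps were out of date", Category.ACCURACY, True),
]

for item, fix in triage(backlog):
    print(f"[{item.category.name}] {item.summary} -> {fix.value}")
```

The point of the sketch is the routing decision: every logged issue ends in one of two actions, a content change in the knowledge base or a change to how the AI responds, with safety issues reviewed first.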

The Result

By combining a tailored knowledge base with a dynamic feedback process, we built a truly member-centric AI:

Real Impact: Cut down routine ticket volume significantly, letting support focus on urgent or nuanced questions.

Faster Iterations: Updates rolled out regularly, ensuring the AI adapted quickly to evolving needs.

Heightened Trust: Members felt their concerns were heard and addressed, thanks to the AI’s up-to-date, empathetic responses.

It wasn’t just about coding a chatbot. It was about ensuring the AI’s heart—its content—grew as our members’ needs grew, anchored by an ever-improving feedback system.

Original Response

Improved Response

Ensuring Trust Through Thoughtful Design

We explored four entry points, balancing leadership’s push for visibility with member needs. Ultimately, we chose a subtle but accessible approach (Option 4): prominent enough for quick help, yet not overshadowing core healthcare features.

We also fostered trust by:

Adding disclaimers clarifying AI limitations

Introducing emergency detection

Providing multiple possibilities instead of a single diagnosis

Members felt safer knowing it wasn’t overstepping into “doctor territory.”

Option 1

Top of Homescreen - High prominence

Option 2

Bottom Nav - Global Ingress

Option 3

Bottom Sheet - Peek View

Option 4

Recommended - Accessible, non-intrusive

What We Launched

We introduced an AI chat assistant directly within the One Medical app, designed to provide instant support and guidance for common healthcare needs.

As a fast follow, we added a secondary entry point within messaging—an "easter egg" for members who naturally turn to messaging for support. This strategic placement allows us to test whether embedding AI in familiar workflows drives higher engagement and reduces support strain.

Try the Prototype

Explore how the AI assistant helps members navigate their care. Note: This is a simplified demo, best viewed on desktop web—functionality is limited compared to what we launched.

Scroll down to the ‘One Medical AI’ section

Click ‘What is Treat Me Now’ to view the chat experience

Post-Launch Insights & Next Steps

Where we succeeded

According to our post-launch diary studies, many members praised the AI’s ease of use and simple language, noting that it felt helpful without overselling. Here are its top strengths:

1. Reduced Support Volume, Faster Responses

“I didn’t have to wait days for a response anymore—the AI gave me helpful suggestions right away”

2. Establishing Trust Through Transparency

“The disclaimer about not being able to provide recommended or personalized medical advice made me feel like the information was trustworthy...”

3. Delivering Clear, Understandable Information

“It performed well in outlining information in an easy-to-digest manner… it was able to provide helpful links and bolded text where necessary.”

4. Seamless Integration with One Medical’s Care Model

“Having the schedule appointment link right there is super helpful, versus navigating the app.”

5. Providing a Helpful First Step Before Care

“I may ask the AI about symptoms before getting on with a telenurse to try one of their remedies first.”

6. Validating Patient Knowledge and Offering Reassurance

“I was pleasantly surprised and felt validated and reassured when the symptoms mirrored what I already thought.”

Where Members Want More

Despite streamlining routine questions, members felt the AI could become even more personal and proactive. They wanted the assistant to factor in past questions, suggest timely follow-ups, and integrate with their health data. Here are some future opportunities:

1. Contextual Memory: Retain past conversations so members don’t have to repeat themselves.

“It did not seem to use the personalization info I was providing, like my age… it should be able to refer back to information I’ve previously provided.”

2. Proactive Health Nudges: Suggest preventive steps or follow-up tests, going beyond reactive Q&A.

“Thinking about my lifestyle choices, what I’m eating, how I’m exercising, making sure I’m getting enough sleep—I think AI could really help with that.”

3. Privacy Assurances: Clarify how data is protected to reinforce trust in a healthcare setting.

“I want you to reassure me that you have my best interests in mind as a patient in need of healthcare. Show me where my data goes and how it’s used.”

Looking Ahead

By anchoring on empathy, usability, and iterative design, we proved AI can be launched swiftly without compromising patient trust. Moving forward, we’re focusing on deeper personalization and proactive engagement—continuing our commitment to a truly patient-centered care experience.

Lessons in Design Leadership

Balancing Speed & Empathy: A 4-month timeline doesn’t have to compromise member trust when design is guided by user research.

Iterative Feedback: Pre- and post-launch trackers made immediate response improvements possible, building credibility over time.

Cross-Functional Alignment: Regular communication with clinical, product, and engineering teams shaped a holistic, safe experience.

Members Seek Genuine Value: If the AI doesn’t feel relevant or personalized, people turn back to Google or standard support channels.